How to Build Governance Structures for Agent-Driven Integrations

Learn how to build governance structures that guide autonomous agents safely, enforce boundaries, and keep enterprise integrations predictable and compliant.

Enterprises are entering a stage where autonomous agents are no longer experimental side projects but active participants in day-to-day operations. These agents interpret goals, decide on actions, and interact with systems without human orchestration. That makes governance not merely a safeguard, but a structural requirement.

Traditional governance frameworks were built for humans and applications that acted predictably. Agents don’t behave in that way. They make choices, revise plans, and attempt actions based on probabilistic reasoning, which means governance must guide their behaviour before problems appear, not after.

Building governance for an agent-driven ecosystem requires enterprises to rethink how rules, intent, boundaries, and system behaviour are expressed. Below is a clear, expert view of how those structures can be designed.

Governance Begins With Defining What Agents Are Allowed to Care About

The first governance layer is not technical; it is conceptual. Agents will behave according to the objectives they are given and the signals they observe. If an enterprise does not define these objectives carefully, agents begin to pursue outcomes that are technically correct but operationally harmful.

For example, an agent tasked with “reduce customer resolution time” might:

- Escalate aggressively

- Automate responses without nuance

- Bypass important checks

- Overload downstream systems

This happens not because the agent is malicious, but because governance did not specify what matters beyond the primary goal.

Enterprises must define a constraint space around each agent:

- What the agent optimises for,

- What trade-offs are unacceptable,

- Which outcomes cannot be compromised,

- What boundaries represent organisational policy, not system behaviour.

This becomes the anchor for every technical rule that follows.
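
As a minimal sketch, the constraint space described above could be declared as data rather than prose. The `ConstraintSpace` class, its field names, and the example tags (`skip_fraud_check` and so on) are hypothetical and chosen only for illustration; each tag stands for a side effect that would break the named constraint.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ConstraintSpace:
    """Hypothetical declaration of what one agent is allowed to care about."""
    optimises_for: str
    # Each set holds tags for side effects that would break that constraint.
    unacceptable_tradeoffs: frozenset = field(default_factory=frozenset)
    protected_outcomes: frozenset = field(default_factory=frozenset)
    policy_boundaries: frozenset = field(default_factory=frozenset)

    def violations(self, side_effects):
        """Return the declared constraints that a proposed plan would break."""
        forbidden = (
            self.unacceptable_tradeoffs
            | self.protected_outcomes
            | self.policy_boundaries
        )
        return sorted(forbidden & set(side_effects))


support_agent = ConstraintSpace(
    optimises_for="customer_resolution_time",
    unacceptable_tradeoffs=frozenset({"skip_fraud_check"}),
    protected_outcomes=frozenset({"overload_downstream_system"}),
    policy_boundaries=frozenset({"auto_refund_above_limit"}),
)

# A plan that resolves tickets faster by skipping a check is flagged.
print(support_agent.violations({"skip_fraud_check", "send_templated_reply"}))
# ['skip_fraud_check']
```

Keeping the declaration separate from the agent means the same object can be reviewed by policy owners and consumed by whatever component enforces it.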

Governance Must Shape the Environment

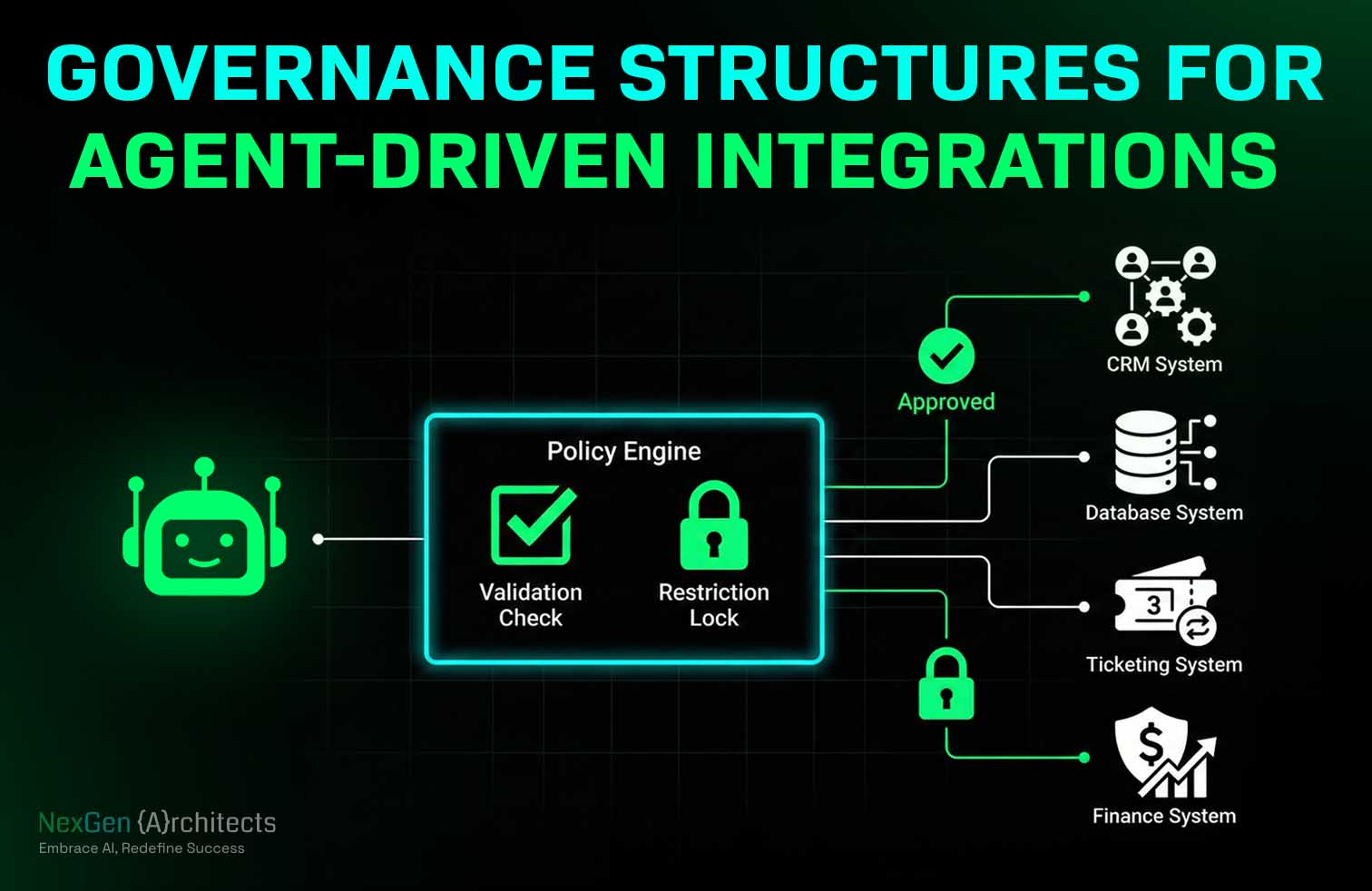

A common mistake is trying to encode every rule inside the agent. This quickly becomes unmaintainable. The agent should not be the container for governance. The environment around it should be.

When governance lives in the ecosystem, not in the model:

- Updates do not require agent retraining,

- Controls apply uniformly across all agent types,

- Behavioural constraints remain intact even as agents evolve,

- Compliance teams can update rules without touching AI logic.

This is where MuleSoft, identity platforms, and policy engines play important roles: not merely as integration tools, but as the structural environment agents must operate within.

Governance Requires Clear Boundaries, Not Detailed Instructions

Humans need instructions. Agents need boundaries. Trying to specify every allowed and disallowed step is futile because agents can generate infinite variations of the same intent. Governance should focus on:

- What the agent cannot do under any circumstance,

- What the agent must validate before taking action,

- What state transitions must never occur automatically,

- What operations require secondary checks,

- What contexts elevate risk and require slower execution.

Boundaries restrict the action space, not the intelligence. They ensure the agent remains within a predictable envelope even as it improvises operational steps.
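
One way to picture boundaries that live outside the agent is a small gate that every proposed action passes through. The sketch below is illustrative only: the `Verdict` values, the boundary tables, and the action names are assumptions, and a real deployment would more likely hold these rules in a policy engine. It covers four of the boundary types listed above (forbidden actions, forbidden automatic transitions, secondary checks, and risk-elevating contexts).

```python
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    DENY = "deny"
    NEEDS_SECOND_CHECK = "needs_second_check"
    SLOW_DOWN = "slow_down"


# Hypothetical boundary tables, maintained outside the agent.
FORBIDDEN_ACTIONS = {"delete_customer_record"}
SECOND_CHECK_ACTIONS = {"issue_refund"}
NO_AUTO_TRANSITIONS = {("pending_review", "approved")}
HIGH_RISK_CONTEXTS = {"month_end_close"}


def evaluate(action, transition=None, context=None):
    """Check a proposed action against the boundaries, most restrictive first."""
    if action in FORBIDDEN_ACTIONS:
        return Verdict.DENY
    if transition in NO_AUTO_TRANSITIONS:
        return Verdict.DENY
    if action in SECOND_CHECK_ACTIONS:
        return Verdict.NEEDS_SECOND_CHECK
    if context in HIGH_RISK_CONTEXTS:
        return Verdict.SLOW_DOWN
    return Verdict.ALLOW


print(evaluate("issue_refund"))
print(evaluate("update_address", context="month_end_close"))
```

The gate says nothing about how the agent should reach its goal; it only narrows the set of actions that can succeed.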

Governance Must Be Expressed in Real Signals

Agents don’t read policies. They respond to the system’s behaviour.

This means governance must appear through:

- System responses

- Enforced constraints

- Consistent patterns

- Identity rules

- Structured outputs

- Well-defined state transitions

An agent that receives a structured “this action is not permitted under current conditions” message will adjust. An agent that receives a generic “failed” message will keep guessing.

Governance becomes effective only when the system expresses rules through interactions, not PDFs.
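
To make the contrast between a structured denial and a generic "failed" concrete, here is a small sketch of what a machine-readable refusal might contain. The field names (`reason_code`, `retry_allowed`, `next_steps`) and the example values are assumptions for illustration, not a standard.

```python
import json


def deny(action, reason_code, message, retry_allowed, next_steps=()):
    """Build a machine-readable denial instead of a bare 'failed'."""
    return {
        "status": "denied",
        "action": action,
        "reason_code": reason_code,
        "message": message,
        "retry_allowed": retry_allowed,
        "next_steps": list(next_steps),
    }


response = deny(
    action="issue_refund",
    reason_code="APPROVAL_REQUIRED",
    message="This action is not permitted under current conditions.",
    retry_allowed=False,
    next_steps=["request_human_approval"],
)
print(json.dumps(response, indent=2))
```

With `retry_allowed` and `next_steps` present, the agent has something to act on other than retrying variations of the same request.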

Identity Governance Becomes the Anchor for Agent Behaviour

In an agentic ecosystem, identities are no longer tied only to people or applications. They must now also represent:

- Agent instances

- Agent roles

- Agent capabilities

- Agent scopes

- Agent purpose

The identity provider becomes the backbone of control, not the API gateway.

For example:

- An agent that reconciles orders must have a different identity and permission set than an agent that updates customer addresses.

- A planning agent should not inherit permissions from an execution agent.

- Temporary elevated access should expire automatically without human intervention.

Identity governance allows enterprises to map accountability and traceability in a world where actions may not originate from humans.
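
The examples above can be sketched as a per-agent identity with explicit scopes and a built-in expiry. `AgentIdentity`, `grant_temporary`, and the scope strings are hypothetical names; a real identity provider would issue and validate these as tokens rather than in-process objects.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AgentIdentity:
    instance_id: str
    role: str
    scopes: frozenset
    expires_at: datetime

    def permits(self, scope, now=None):
        """A scope is usable only while the identity itself is still valid."""
        now = now or datetime.now(timezone.utc)
        return now < self.expires_at and scope in self.scopes


def grant_temporary(identity, extra_scope, ttl, now=None):
    """Return a separate identity whose elevated scope expires on its own."""
    now = now or datetime.now(timezone.utc)
    return AgentIdentity(
        instance_id=identity.instance_id,
        role=identity.role,
        scopes=identity.scopes | {extra_scope},
        expires_at=min(identity.expires_at, now + ttl),
    )


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
reconciler = AgentIdentity(
    "agent-7", "order_reconciler", frozenset({"orders:read"}),
    expires_at=start + timedelta(days=1),
)
elevated = grant_temporary(reconciler, "orders:write", timedelta(minutes=15), now=start)

print(elevated.permits("orders:write", now=start + timedelta(minutes=5)))
print(elevated.permits("orders:write", now=start + timedelta(minutes=20)))
```

Because the elevated identity is a new object with its own expiry, the original identity never gains the extra scope and nothing has to be revoked by hand.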

Governance Requires Predictable Patterns That Agents Can Learn From

Agents learn patterns, not exceptions. When responses, errors, or behaviours vary unpredictably across systems, the agent’s internal model becomes unstable.

Governance therefore must enforce:

- Consistent conventions for how systems respond,

- Predictable structures around errors,

- Stable formats for state changes,

- Uniform signalling for denial, approval, or deferral,

- Coherent sequencing patterns across related operations.

The more stable the environment, the more stable the agent.
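
Uniform signalling can be approximated even when backends disagree, by translating their responses at the integration layer. The following sketch assumes three hypothetical systems (`crm`, `erp`, `billing`) and invented raw codes; only the idea of mapping them onto one small vocabulary is the point.

```python
# Hypothetical raw results from backends that each signal outcomes differently.
RAW_SIGNALS = {
    ("crm", 403): "denied",
    ("erp", "ERR_LOCKED"): "deferred",
    ("billing", "OK"): "approved",
    ("billing", "REJECTED"): "denied",
}


def normalise(system, raw_code):
    """Translate a backend-specific code into one uniform envelope."""
    outcome = RAW_SIGNALS.get((system, raw_code), "error")
    return {"outcome": outcome, "system": system, "raw_code": str(raw_code)}


print(normalise("crm", 403))
print(normalise("erp", "ERR_LOCKED"))
print(normalise("billing", "TIMEOUT"))
```

The agent then only ever sees four outcomes (`approved`, `denied`, `deferred`, `error`), whichever system it is talking to.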

Governance Must Account for the Fact That Agents Do Not Forget

When a human learns a process is outdated, they adjust. Agents preserve old sequences indefinitely unless they encounter strong contradictory signals.

This means governance must address:

- How outdated workflows are deactivated,

- How deprecated paths are signalled programmatically,

- How agents are guided away from legacy states,

- How previous behaviours are prevented from resurfacing.

Deprecation is no longer just a notice; it must be an engineered experience that the agent cannot misinterpret.
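
A minimal sketch of programmatic deprecation, assuming a hypothetical registry of retired operations: before the sunset date the call still works but carries a warning and a replacement, and after it the call is refused with the same pointer. The operation names and the `gone` status are illustrative.

```python
from datetime import date

# Hypothetical registry of retired operations and their replacements.
DEPRECATED = {
    "orders.v1.update": {
        "replacement": "orders.v2.patch",
        "sunset": date(2024, 6, 30),
    },
}


def dispatch(operation, today):
    """Answer a call with an explicit, structured deprecation signal."""
    entry = DEPRECATED.get(operation)
    if entry is None:
        return {"status": "ok", "operation": operation}
    if today > entry["sunset"]:
        # Past sunset: refuse, and say exactly where to go instead.
        return {
            "status": "gone",
            "operation": operation,
            "use_instead": entry["replacement"],
        }
    # Before sunset: still served, but flagged on every response.
    return {
        "status": "ok",
        "operation": operation,
        "deprecated": True,
        "sunset": entry["sunset"].isoformat(),
        "use_instead": entry["replacement"],
    }


print(dispatch("orders.v1.update", date(2024, 6, 1)))
print(dispatch("orders.v1.update", date(2024, 7, 1)))
```

Because the replacement is named in every response, an agent that replays an old sequence is steered to the new path rather than left to guess.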

Governance Must Include Observability That Explains Behaviour

Traditional logging answers what happened. Agent-era observability must answer why it happened.

Enterprises need visibility into:

- The agent’s decision path,

- The system conditions that influenced decisions,

- The boundaries the agent encountered,

- The constraints it reacted to,

- The alternatives it chose not to pursue.

Without this context, enterprises cannot diagnose undesirable behaviour or refine governance structures. This is essential for safety, compliance, and operational trust.
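
As an illustration of logging the "why" alongside the "what", a decision record might capture the items listed above in one structured entry. `DecisionRecord` and its fields are hypothetical, and the sketch assumes the agent or its runtime can report rejected alternatives at all.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DecisionRecord:
    agent_id: str
    goal: str
    chosen_action: str
    system_conditions: dict = field(default_factory=dict)
    boundaries_hit: list = field(default_factory=list)
    rejected_alternatives: list = field(default_factory=list)

    def to_log_line(self):
        """Serialise the record as one JSON line for a log pipeline."""
        return json.dumps(asdict(self), sort_keys=True)


record = DecisionRecord(
    agent_id="agent-7",
    goal="reduce customer resolution time",
    chosen_action="request_human_approval",
    system_conditions={"queue_depth": 42, "context": "month_end_close"},
    boundaries_hit=["issue_refund requires secondary check"],
    rejected_alternatives=["issue_refund", "escalate_to_tier_2"],
)
print(record.to_log_line())
```

A reviewer reading this line can see not only that approval was requested, but which boundary caused it and what the agent considered first.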

Governance Is Meant to Protect the Organisation

A well-governed agentic ecosystem is not restrictive.

It is safer, more predictable, and ultimately more scalable.

The purpose of governance is:

- To keep agents aligned with organisational intent,

- To prevent harmful deviations,

- To ensure stability across systems,

- To support explainability,

- To keep automation compliant with laws and policies,

- To maintain trust in autonomous operations.

Without governance, agents become unpredictable.

With proper governance, they become reliable operators.

Conclusion

As autonomous agents take on more operational responsibilities, governance becomes the foundation that keeps enterprise systems safe, predictable, and aligned with business goals. The shift is not about controlling intelligence but about shaping the environment in which intelligence operates.

Enterprises that treat governance as an architectural pillar, not a compliance formality, will be the ones able to deploy agentic automation at scale without sacrificing safety or control.
